For further reading, the interested reader can look at and the references therein. A distributed algorithm for solving the TLS task in ad hoc sensor networks has been proposed in. A recursive scheme for the efficient solution of the TLS task has appeared in. TLS has been widely used in a number of applications, such as computer vision, system identification, speech and image processing, and spectral analysis.

To demonstrate the potential of TLS to improve upon the performance of the LS estimator, in this example we use noise not only in the input but also in the output samples. To this end, we randomly generate an input matrix, $X \in \mathbb{R}^{150 \times 90}$, filling it with elements drawn from the normalized Gaussian, $\mathcal{N}(0, 1)$. In the sequel, we generate the vector $\theta_o \in \mathbb{R}^{90}$ by randomly drawing samples, also from the normalized Gaussian. The noisy input matrix is $\tilde{X} = X + E$, where $E$ is filled with elements drawn randomly from $\mathcal{N}(0, 0.2)$. Using the generated $\tilde{y}$, $X$, $\tilde{X}$ and pretending that we do not know $\theta_o$, the following three estimates are obtained for its value. Using the LS estimator (6.5) together with $X$ and $\tilde{y}$, the average (over 10 different realizations) Euclidean distance of the obtained estimate $\hat{\theta}$ from the true one is $\|\hat{\theta} - \theta_o\| = 0.0125$.
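The effect of input noise on LS, and how TLS compensates for it, can be sketched in a scaled-down, single-parameter version of the experiment above, using only the Python standard library. This is a minimal illustration, not the book's code, and several settings are assumptions not taken from the text: $\theta_o = 1$, $n = 20000$ samples, $\mathcal{N}(0, 0.2)$ read as variance 0.2, and output noise of the same variance as the input noise (the case in which TLS is consistent). For one parameter, the standard closed-form TLS solution $\hat{\theta}_{TLS} = (X^T X - \sigma_{min}^2 I)^{-1} X^T y$, where $\sigma_{min}$ is the smallest singular value of the augmented matrix $[X \; y]$, reduces to $S_{xy} / (S_{xx} - \lambda_{min})$, with $\lambda_{min}$ the smallest eigenvalue of the $2 \times 2$ matrix $[X \; y]^T [X \; y]$.

```python
import math
import random


def ls_and_tls_slope(x, y):
    """Return (LS, TLS) estimates of theta for the model y ~ theta * x (no intercept)."""
    sxx = sum(xi * xi for xi in x)
    syy = sum(yi * yi for yi in y)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    theta_ls = sxy / sxx
    # Smallest eigenvalue of [[sxx, sxy], [sxy, syy]], i.e. sigma_min^2 of [x y].
    lam_min = 0.5 * ((sxx + syy) - math.sqrt((sxx - syy) ** 2 + 4.0 * sxy ** 2))
    # Scalar form of theta_TLS = (X^T X - sigma_min^2 I)^{-1} X^T y.
    theta_tls = sxy / (sxx - lam_min)
    return theta_ls, theta_tls


def simulate(theta_o=1.0, n=20000, noise_var=0.2, seed=0):
    """Errors-in-variables toy experiment; all parameter values are assumptions."""
    rng = random.Random(seed)
    sd = math.sqrt(noise_var)  # N(0, 0.2) interpreted as variance 0.2 (assumption)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]          # clean input, N(0, 1)
    y = [theta_o * xi for xi in x]                        # clean output
    x_noisy = [xi + rng.gauss(0.0, sd) for xi in x]       # X~ = X + E
    y_noisy = [yi + rng.gauss(0.0, sd) for yi in y]       # y~, assumed output-noise model
    return ls_and_tls_slope(x_noisy, y_noisy)


if __name__ == "__main__":
    theta_ls, theta_tls = simulate()
    print(f"LS  estimate: {theta_ls:.3f}")
    print(f"TLS estimate: {theta_tls:.3f}")
```

With equal noise variances, the LS slope is pulled toward $1/1.2 \approx 0.83$ (attenuation caused by the noisy input), while the TLS slope stays close to 1. Because $\lambda_{min} \ge 0$ is subtracted from $S_{xx}$, $|\hat{\theta}_{TLS}| \ge |\hat{\theta}_{LS}|$ always holds in this scalar case, a one-dimensional view of the larger-norm tendency of TLS.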

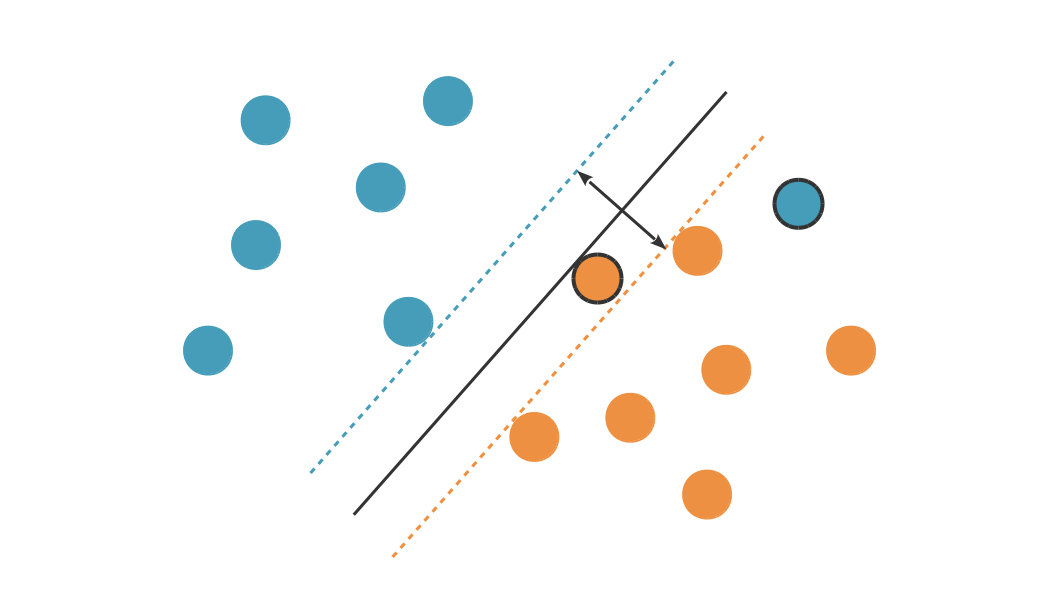

This is basically a normalized (weighted) version of the LS cost. Looking at it more carefully, TLS promotes vectors of larger norm, which can be seen as a “deregularizing” tendency of TLS. From a numerical point of view, this can also be verified by (6.80): the matrix to be inverted for the TLS solution is more ill-conditioned than its LS counterpart. The robustness of TLS can be improved via regularization. Furthermore, extensions of TLS that employ other cost functions, in order to address the presence of outliers, have also been proposed. The TLS method has also been extended to deal with the more general case where y and θ become matrices.

Initially, the input images (user request images) are captured from input devices. A classification technique is then applied to these input images, i.e., each image can be rotated clockwise or anticlockwise, and the features extracted from each image are stored in the local database “W”. The database “I” already consists of feature-extracted images. When a user passes a query image as input, the hyperplane calculates the similarity between the query image and the database images based on the Tanimoto distance. The hyperplane then moves the image with the minimum distance to the database by rotating it either clockwise or anticlockwise.

It is known that convex sets that do not intersect can be separated by a hyperplane. Assume that “W” and “I” are two convex sets that do not intersect, i.e., $I \cap W = \emptyset$, where the input image set “I” is the collection of angle-oriented clusters of input images and “W” is the collection of database images. Then there exist $a \neq 0$ and $b$ such that $a^T x \le b$ for all $x \in I$ and $a^T x \ge b$ for all $x \in W$. In other words, the affine function $a^T x - b$ is nonpositive on “I” and nonnegative on “W”. A separating hyperplane exists for the sets “I” and “W” if and only if they can be (strongly) separated. Also, if one set is compact and the other is closed, then $K - L$ is closed; this is not true in general for two closed sets.

Hyperplane of angle-oriented image recognition.
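The separation statement can be checked numerically for finite point sets, whose convex hulls are compact. The sketch below uses the classic perceptron rule, a swapped-in technique rather than the method of the image-recognition work, to search for $a \neq 0$ and $b$ with $a^T x < b$ on “I” and $a^T x > b$ on “W”. The point coordinates in the usage example are hypothetical stand-ins for image feature vectors.

```python
def find_separating_hyperplane(I_pts, W_pts, max_epochs=1000):
    """Perceptron search for (a, b) with a^T x < b on I_pts and a^T x > b on W_pts.

    Converges only if the two finite sets are strictly linearly separable.
    """
    dim = len(I_pts[0])
    a = [0.0] * dim
    b = 0.0
    # Label points of I as -1 (want a^T x - b < 0) and points of W as +1 (want > 0).
    data = [(x, -1) for x in I_pts] + [(x, +1) for x in W_pts]
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in data:
            score = sum(ai * xi for ai, xi in zip(a, x)) - b
            if label * score <= 0:
                # Perceptron update of the augmented weight vector (a, -b) on input (x, 1).
                a = [ai + label * xi for ai, xi in zip(a, x)]
                b -= label
                mistakes += 1
        if mistakes == 0:
            return a, b
    raise ValueError("no separating hyperplane found within max_epochs")


if __name__ == "__main__":
    I_pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]  # hypothetical features of set I
    W_pts = [(3.0, 3.0), (4.0, 3.0), (3.0, 4.0)]  # hypothetical features of set W
    a, b = find_separating_hyperplane(I_pts, W_pts)
    print("a =", a, "b =", b)
```

The returned pair satisfies the inequalities strictly, so it also satisfies the non-strict form $a^T x \le b$ on “I” and $a^T x \ge b$ on “W”. If the sets overlap, the loop never reaches zero mistakes and the function raises an error instead of returning a false hyperplane.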

# Separating hyperplane update

In this study, the HD-SVM algorithm is implemented, and HD-SVM, TIL and NIL are compared for process fault detection and diagnosis (FDD). By improving the mechanism for selecting samples, HD-SVM overcomes the weakness of TIL in retaining information; by considering these samples, the loss of information caused by discarding samples is significantly reduced. It was shown that HD-SVM considerably reduces the training time of the classifier compared with NIL (1/10), while increasing the accuracy of the classifier (by 1.1%) compared with TIL.

In this study, it is assumed that the datasets used for training, testing and incremental learning of the classifier contain no missing or outlier samples. The effects of missing and outlier samples on these methods should be investigated in another study. Future work also includes developing a framework that not only updates classifiers automatically, but also monitors and measures the progressive changes of the process, in order to detect abnormal process behaviours related to drifting terms.

Figure 8.5.
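The sample-selection idea behind KKT-based incremental SVM learning can be sketched as follows. For a soft-margin SVM with decision function $f(x) = w^T x + b$, a newly arriving sample enters with dual variable $\alpha = 0$, whose KKT condition is $y f(x) \ge 1$; samples with $y f(x) < 1$ therefore violate the KKT conditions. This is a minimal sketch, not the published HD-SVM procedure: the rule for which KKT-satisfying samples to keep is not given in the text, so the threshold `near_margin` and the helper name `select_incremental_samples` are hypothetical.

```python
def select_incremental_samples(samples, w, b, near_margin=1.5):
    """Split new samples for an incremental update of a linear SVM.

    samples: list of (x, y) pairs with y in {-1, +1}; w, b: current model.
    Returns (violators, near_satisfiers). The near_margin rule is hypothetical.
    """
    violators, near_satisfiers = [], []
    for x, y in samples:
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        if margin < 1.0:
            # New sample has alpha = 0; its KKT condition y * f(x) >= 1 is violated.
            violators.append((x, y))
        elif margin < near_margin:
            # Satisfies the KKT conditions but lies close to the margin (hypothetical rule).
            near_satisfiers.append((x, y))
        # Samples far beyond the margin are discarded in this sketch.
    return violators, near_satisfiers


if __name__ == "__main__":
    samples = [([0.5, 0.0], 1), ([1.2, 3.0], 1), ([5.0, 0.0], 1),
               ([0.3, 0.0], -1), ([-2.0, 1.0], -1)]
    viol, near = select_incremental_samples(samples, w=[1.0, 0.0], b=0.0)
    print("KKT violators:", viol)
    print("near-margin KKT satisfiers:", near)
```

Keeping some KKT-satisfying samples in addition to the violators is what limits the information loss of purely violator-based selection, because samples close to the margin can become support vectors after the model is updated.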

# Separating hyperplane plus

In the HD-SVM incremental learning algorithm, in addition to the samples that violate the KKT conditions, samples that satisfy the KKT conditions are also added into incremental learning.

Antonio Espuña, in Computer Aided Chemical Engineering, 2016

4 Conclusions
